Back in Fall 2018, I took CS 534 (Computational Photography) at UW-Madison. The term project was open-ended, so naturally my team and I decided to see if we could get a neural network to generate video game sprites. You know, the little 32×32 pixel art icons you’d find in a dungeon crawler or fantasy RPG (swords, shields, slimes, floor tiles). The idea was straightforward: feed a GAN a bunch of CC-licensed sprite-sheets and see what comes out the other side. In practice, nothing about GANs is straightforward.

Please check out the project website.

Summary

We built a data pipeline to extract, normalize, and package sprites from public sprite-sheets into a consistent 32×32 RGBA format. Then we trained two types of generative models: a standard DCGAN, and a hybrid that bolted an autoencoder onto the front of the GAN’s discriminator. The autoencoder learns a compressed representation of what a sprite “looks like” first; the GAN’s generator then tries to produce images that fool that learned representation. We trained separate models for three sprite categories (items and weapons, entities and humanoids, and environment tiles).
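To make the extract-and-normalize step concrete, here is a minimal sketch of how a sprite-sheet could be cut into 32×32 RGBA tiles with NumPy. It is an illustration, not the code from the project repos: the function names `slice_sheet` and `normalize`, the rule for dropping fully transparent cells, and the [-1, 1] scaling (a common choice when a generator ends in `tanh`) are all assumptions.

```python
import numpy as np

TILE = 32  # sprite edge length in pixels, per the write-up


def slice_sheet(sheet: np.ndarray, tile: int = TILE) -> np.ndarray:
    """Cut an (H, W, 4) uint8 RGBA sheet into (N, tile, tile, 4) sprites.

    Hypothetical helper: assumes sprites sit on a regular grid with no padding.
    """
    if sheet.ndim != 3 or sheet.shape[2] != 4:
        raise ValueError("expected an (H, W, 4) RGBA array")
    rows, cols = sheet.shape[0] // tile, sheet.shape[1] // tile
    # Crop any ragged edge so the sheet divides evenly into tiles.
    sheet = sheet[: rows * tile, : cols * tile]
    # Split both spatial axes into (grid index, pixel index), then group the
    # two grid axes together so each tile is contiguous.
    tiles = sheet.reshape(rows, tile, cols, tile, 4).swapaxes(1, 2)
    tiles = tiles.reshape(rows * cols, tile, tile, 4)
    # Assumption: a cell whose alpha channel is all zero is an empty grid slot.
    keep = tiles[..., 3].any(axis=(1, 2))
    return tiles[keep]


def normalize(sprites: np.ndarray) -> np.ndarray:
    """Scale uint8 [0, 255] to float32 [-1, 1]."""
    return sprites.astype(np.float32) / 127.5 - 1.0


# Synthetic 64x96 sheet (a 2x3 grid) with one opaque sprite in the top-left cell.
sheet = np.zeros((64, 96, 4), dtype=np.uint8)
sheet[0:32, 0:32] = [200, 50, 50, 255]
sprites = normalize(slice_sheet(sheet))
print(sprites.shape)  # (1, 32, 32, 4)
```

Real sprite-sheets often have margins, spacing, or mixed tile sizes, so a production version would need per-sheet grid metadata rather than a fixed 32-pixel stride.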

Did it produce gallery-ready pixel art? No. Did it produce recognizable, vaguely sprite-shaped blobs with coherent color palettes? Yes. For a semester project with limited compute and a few thousand training samples, that felt like a win.

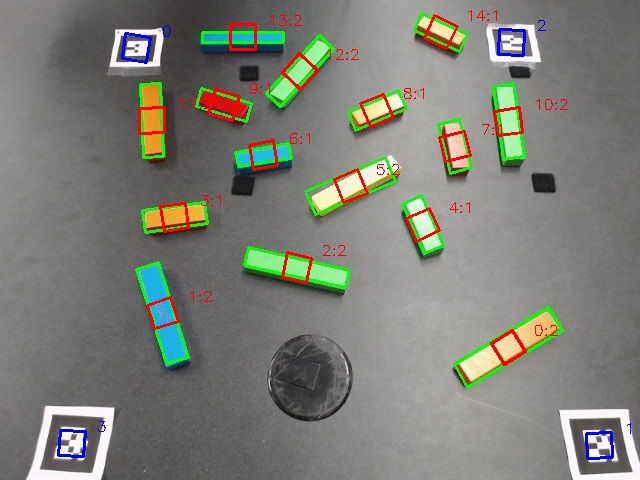

GAN generating a batch of body armor sprites.

Project Structure

The project is split across a few repos:

- GAN Models: Jupyter notebooks with the DCGAN and autoencoder-GAN implementations (Keras/TensorFlow)
- GAN MNIST: warmup experiments learning GANs on the MNIST digits dataset
- Sprite Data: data pipeline scripts for extraction, normalization, dimension sorting, and NumPy packaging
- Project Website: a Jekyll site with dataset links, results, and the write-up
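As a rough idea of what the "dimension sorting" and "NumPy packaging" steps in the Sprite Data repo could involve, the sketch below groups sprites by their height and width and stores each group as one stacked array in a compressed `.npz` archive. This is a guess at the shape of the step, not the repo's actual scripts: `bucket_by_size` and the `"HxW"` key format are hypothetical, and an in-memory buffer stands in for a file on disk.

```python
import io
from collections import defaultdict

import numpy as np


def bucket_by_size(sprites):
    """Group (H, W, 4) sprites by size; return {"HxW": (N, H, W, 4) array}."""
    buckets = defaultdict(list)
    for sprite in sprites:
        h, w = sprite.shape[:2]
        buckets[f"{h}x{w}"].append(sprite)
    # Sprites in one bucket share a shape, so they stack into a single array.
    return {key: np.stack(group) for key, group in buckets.items()}


# Three dummy RGBA sprites: two at 32x32 and one at 16x16.
sprites = [
    np.zeros((32, 32, 4), dtype=np.uint8),
    np.ones((16, 16, 4), dtype=np.uint8),
    np.zeros((32, 32, 4), dtype=np.uint8),
]
packed = bucket_by_size(sprites)

# Write one named array per size bucket; a real script would pass a file path.
buf = io.BytesIO()
np.savez_compressed(buf, **packed)
buf.seek(0)

loaded = np.load(buf)
print({key: loaded[key].shape for key in loaded.files})
```

Keeping each size in its own array means a training notebook can load only the 32×32 bucket without resizing or padding anything at load time.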

Everything was trained on Google Colab.

Why Post This Now?

I’ve been cleaning out old project directories and figured this one deserved a reference post rather than just sitting in a folder. GANs have come a long way since 2018 (diffusion models have largely eaten this space), but there’s something satisfying about a project where you can trace the full arc from raw sprite-sheets to generated output in a handful of notebooks. It’s a good snapshot of where the tooling was, and the autoencoder-GAN comparison is still a useful pedagogical exercise.

Thanks for reading. Stay tuned and keep building.