Collaborative robots are showing up in more and more manufacturing facilities, but there’s a persistent gap between what cobots can do and what most operators know how to make them do. The programming and troubleshooting skills needed to get the most out of a cobot don’t come naturally, and existing training programs haven’t kept up.

During my time at the People and Robots Laboratory at UW-Madison, we built CoFrame, a training and programming environment designed to help novice operators learn to think about cobot applications the way experts do.

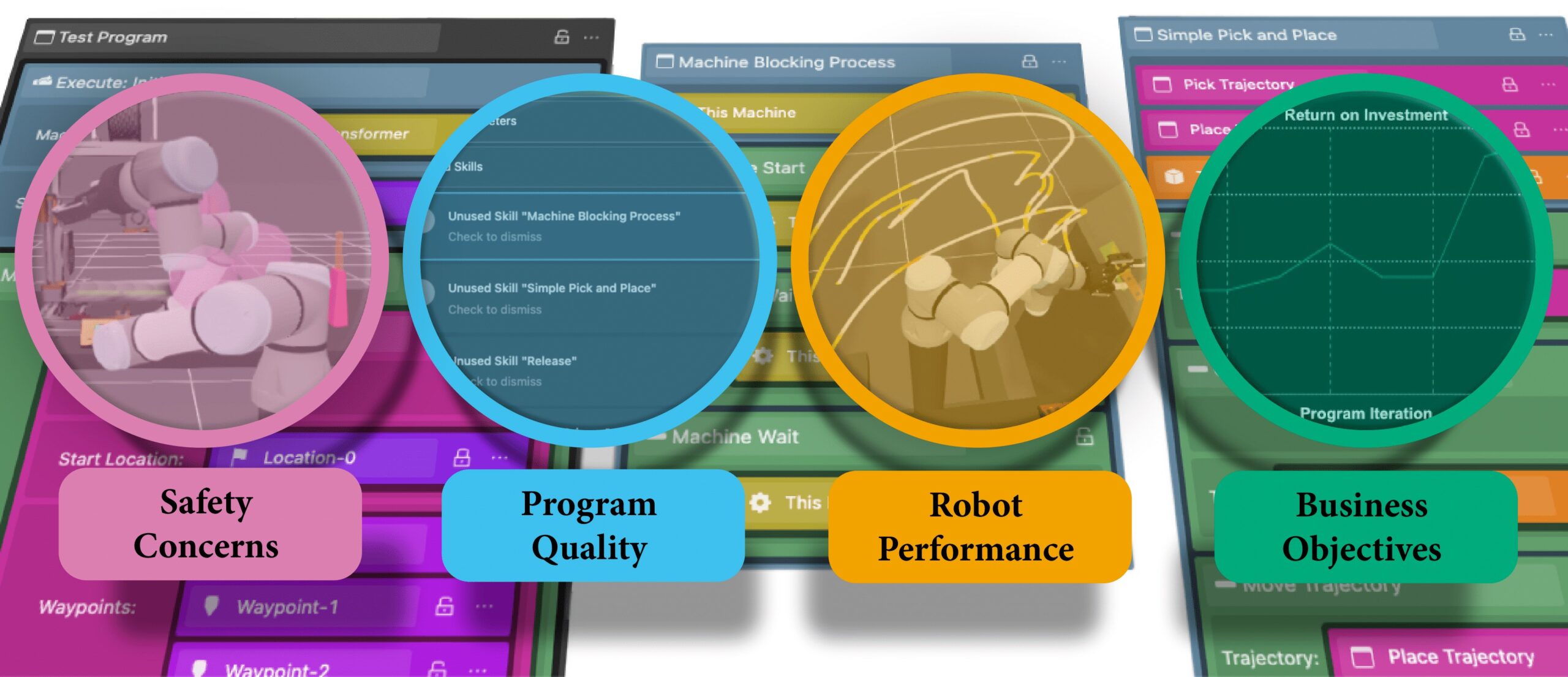

The starting point was an ethnographic study by Siebert-Evenstone et al. that interviewed cobot experts (engineers, implementers, trainers) to understand how they reason about application design. The key finding was a “Safety First” structure: experts keep safety concerns front and center while simultaneously weighing program quality, robot performance, and business objectives. We took that expert model and translated it into four Expert Frames that serve as lenses for evaluating a cobot program.

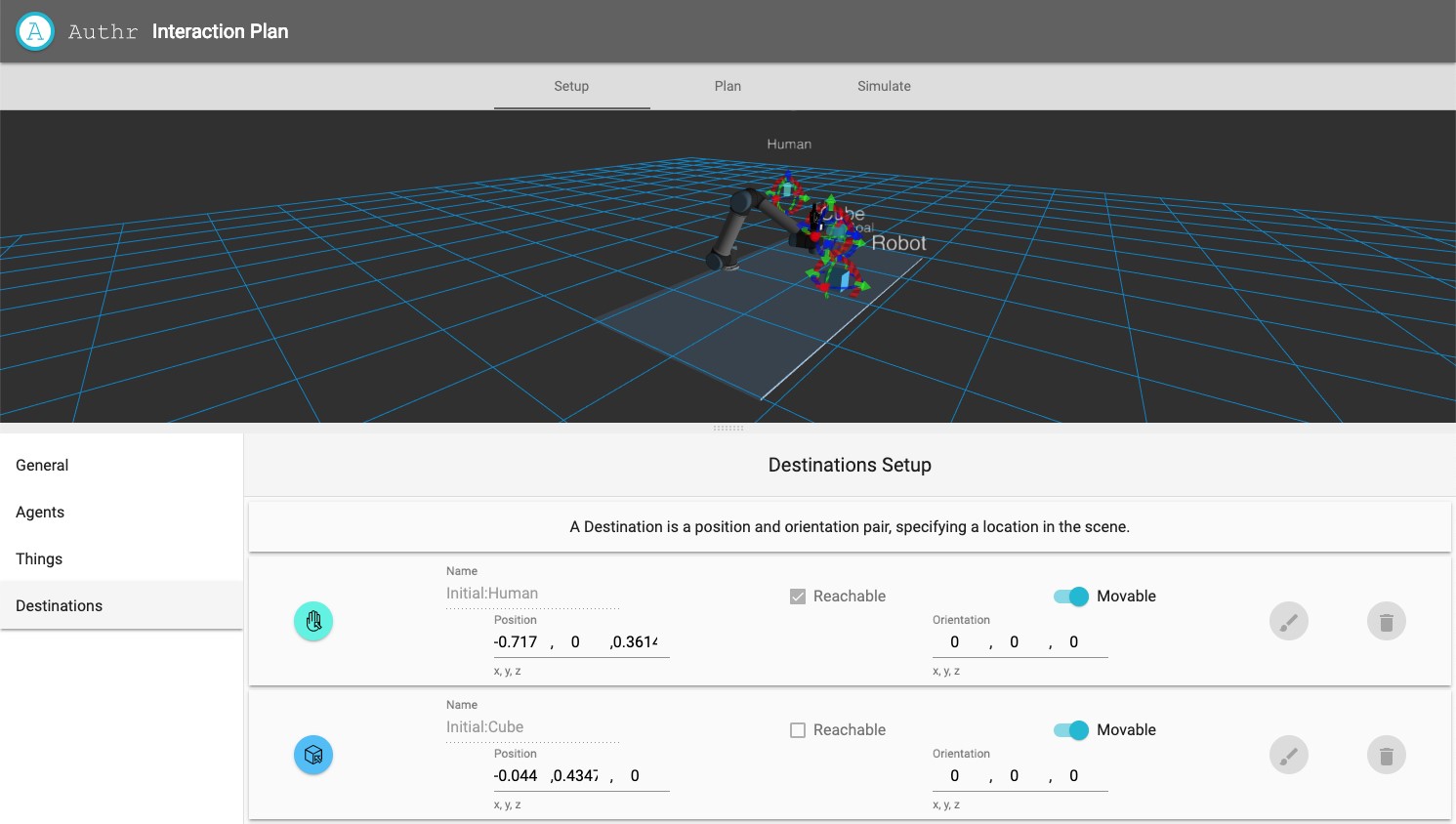

CoFrame itself is a web-based environment built with React, Three.js, and a PyBullet simulation backend. Operators build programs using a block-based visual programming language (think Blockly or Scratch), and as they work, the system automatically generates feedback organized by frame. Safety Concerns flags things like pinch points, collisions, and unsafe object handling. Program Quality catches missing parameters and logic errors. Robot Performance surfaces reachability, joint speed, and payload issues. Business Objectives tracks cycle time, idle time, and return on investment.
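To make the frame-based organization concrete, here is a minimal sketch of how automatically generated feedback could be bucketed by frame. It is a hypothetical illustration, not code from the CoFrame repository: the `Frame`, `Issue`, and `group_by_frame` names and the example block labels are assumptions. Only the four frame names come from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class Frame(Enum):
    """The four Expert Frames used to organize feedback."""
    SAFETY = "Safety Concerns"
    QUALITY = "Program Quality"
    PERFORMANCE = "Robot Performance"
    BUSINESS = "Business Objectives"


@dataclass(frozen=True)
class Issue:
    """One piece of feedback (hypothetical structure, not CoFrame's)."""
    frame: Frame
    kind: str    # e.g. "pinch point", "missing parameter"
    target: str  # the program block the issue is attached to


def group_by_frame(issues):
    """Bucket issues by frame, keeping every frame present even if it is empty."""
    grouped = {frame: [] for frame in Frame}  # Enum iterates in definition order
    for issue in issues:
        grouped[issue.frame].append(issue)
    return grouped


if __name__ == "__main__":
    issues = [
        Issue(Frame.SAFETY, "pinch point", "move-to-gripper"),
        Issue(Frame.QUALITY, "missing parameter", "machine-init"),
        Issue(Frame.PERFORMANCE, "unreachable pose", "move-to-gripper"),
    ]
    for frame, found in group_by_frame(issues).items():
        print(f"{frame.value}: {len(found)}")
```

Keeping empty frames in the result lets an interface show all four lenses at once, even when one of them currently has nothing to report.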

The frames aren’t independent; they have dependencies that guide the operator through a logical progression. You address reachability before worrying about pinch points, and you fix missing parameters before the system can calculate cycle time. This mirrors how experts naturally layer their concerns.
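The dependency ordering can be sketched as a small prerequisite graph. This is a hypothetical illustration, not CoFrame's implementation. The check names and the `PREREQUISITES` and `run_checks` names are assumptions, and only the two dependencies named above (reachability before pinch points, missing parameters before cycle time) are encoded. It uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) so every check's prerequisites are evaluated first.

```python
from graphlib import TopologicalSorter

# Hypothetical prerequisite map: check -> checks that must pass first.
# Only the two dependencies described in the text are encoded here.
PREREQUISITES = {
    "missing_parameters": set(),
    "reachability": set(),
    "pinch_points": {"reachability"},
    "cycle_time": {"missing_parameters"},
}


def run_checks(results):
    """Return a status for each check: "pass", "fail", or "blocked".

    `results` maps a check name to the number of issues it found
    (hypothetical input; a real system would derive this from the program).
    A check is blocked if any prerequisite failed or was itself blocked.
    """
    status = {}
    # static_order() yields each check only after all of its prerequisites.
    for check in TopologicalSorter(PREREQUISITES).static_order():
        if any(status[p] != "pass" for p in PREREQUISITES[check]):
            status[check] = "blocked"
        elif results.get(check, 0) > 0:
            status[check] = "fail"
        else:
            status[check] = "pass"
    return status


if __name__ == "__main__":
    print(run_checks({"missing_parameters": 2, "reachability": 0, "pinch_points": 1}))
```

In this example, cycle time comes back as `"blocked"` rather than `"pass"`, so the operator is never told a downstream metric is fine when it simply could not be computed yet.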

We demonstrated the system through three case studies: defining a trajectory (and iterating through collision feedback), debugging a movement (balancing speed, pinch points, and space usage), and working with machines (handling initialization logic and unsafe object transport). Each case study shows how operators move between frames, building up the kind of interconnected reasoning that characterizes expert thinking.

The work was published at HRI 2022 in Sapporo, Japan. Source code is on GitHub.

Paper: